Hi, I'm Sander!

My name is Sander Staal, and welcome to my personal website. Since the beginning of 2020, I have been working as a software engineer at Google on the YouTube data team. Before joining Google, I worked as a research assistant at the Perceptual User Interfaces Group, University of Stuttgart (Germany), and at the Distributed Systems Group, ETH Zürich (Switzerland).

During my time in research, I worked on perceptually optimized user interface notifications and on quantifying visual attention during everyday mobile phone interactions. I obtained my Master's (2019) and Bachelor's (2017) degrees in computer science from ETH Zürich.

Interests

Distributed Systems

No matter how powerful individual systems and CPUs become, there will always be a need to organize and manage large-scale distributed systems. This raises a wide range of interesting questions, such as how to optimize distributed computations, how to design communication models between these systems, and how to find new algorithms to better organize and process large-scale datasets.

Eye Tracking

As smart devices become more ubiquitous, eye gaze can enhance the way we interact with the objects around us. Many applications can benefit from knowing where the user is currently looking. Combined with related techniques from computer vision, such as scene understanding or object detection, gaze lets us create new systems and applications that assist people in their daily lives.

Ubiquitous Computing

With the ever-increasing number of devices we use in our daily lives, there is considerable room to improve the way we interact with them. New technologies in wireless communication, wearable computing, and location awareness can make these devices much more aware of their surroundings and context.

Machine Learning

With the major advances in machine learning over the last 15 years, such as reinforcement learning and the growing use of neural networks, we can apply these concepts in combination with computer vision and image processing to create new and innovative systems.

Publications

-

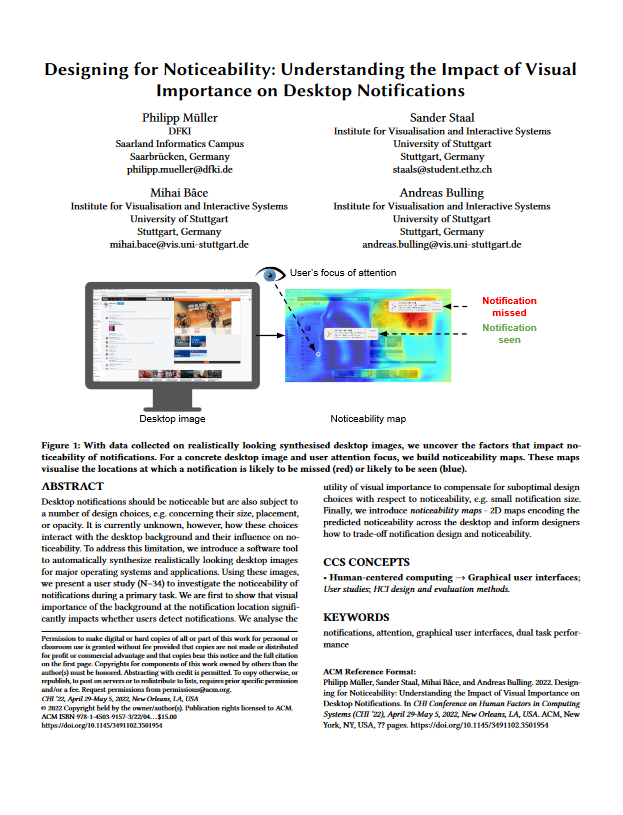

Designing for Noticeability: The Impact of Visual Importance on Desktop Notifications

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), 2022

Philipp Müller, Sander Staal, Mihai Bâce, Andreas Bulling

Desktop notifications should be noticeable but are also subject to a number of design choices, e.g. concerning their size, placement, or opacity. It is currently unknown, however, how these choices interact with the desktop background and their influence on noticeability. To address this limitation, we introduce a software tool to automatically synthesize realistically looking desktop images for major operating systems and applications. Using these images, we present a user study (N=34) to investigate the noticeability of notifications during a primary task. We are first to show that visual importance of the background at the notification location significantly impacts whether users detect notifications. We analyse the utility of visual importance to compensate for suboptimal design choices with respect to noticeability, e.g. small notification size. Finally, we introduce noticeability maps - 2D maps encoding the predicted noticeability across the desktop and inform designers how to trade-off notification design and noticeability.

-

Quantification of Users' Visual Attention During Everyday Mobile Device Interactions

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), 2020

Mihai Bâce, Sander Staal, Andreas Bulling

We present the first real-world dataset and quantitative evaluation of visual attention of mobile device users in-situ, i.e. while using their devices during everyday routine. Understanding user attention is a core research challenge in mobile HCI but previous approaches relied on usage logs or self-reports that are only proxies and consequently do neither reflect attention completely nor accurately. Our evaluations are based on Everyday Mobile Visual Attention (EMVA) – a new 32-participant dataset containing around 472 hours of video snippets recorded over more than two weeks in real life using the front-facing camera as well as associated usage logs, interaction events, and sensor data. Using an eye contact detection method, we are first to quantify the highly dynamic nature of everyday visual attention across users, mobile applications, and usage contexts. We discuss key insights from our analyses that highlight the potential and inform the design of future mobile attentive user interfaces.

-

How far are we from quantifying visual attention in mobile HCI?

IEEE Pervasive Computing, 19, pp. 46-55, 2020

Mihai Bâce, Sander Staal, Andreas Bulling

With an ever-increasing number of mobile devices competing for our attention, quantifying when, how often, or for how long users visually attend to their devices has emerged as a core challenge in mobile human-computer interaction. Encouraged by recent advances in automatic eye contact detection using machine learning and device-integrated cameras, we provide a fundamental investigation into the feasibility of quantifying visual attention during everyday mobile interactions. We identify core challenges and sources of errors associated with sensing attention on mobile devices in the wild, including the impact of face and eye visibility, the importance of robust head pose estimation, and the need for accurate gaze estimation. Based on this analysis, we propose future research directions and discuss how eye contact detection represents the foundation for exciting new applications towards next-generation pervasive attentive user interfaces.

-

Accurate and Robust Eye Contact Detection During Everyday Mobile Device Interactions

arXiv, 2019

Mihai Bâce, Sander Staal, Andreas Bulling

Quantification of human attention is key to several tasks in mobile human-computer interaction (HCI), such as predicting user interruptibility, estimating noticeability of user interface content, or measuring user engagement. Previous works to study mobile attentive behaviour required special-purpose eye tracking equipment or constrained users' mobility. We propose a novel method to sense and analyse visual attention on mobile devices during everyday interactions. We demonstrate the capabilities of our method on the sample task of eye contact detection that has recently attracted increasing research interest in mobile HCI. Our method builds on a state-of-the-art method for unsupervised eye contact detection and extends it to address challenges specific to mobile interactive scenarios. Through evaluation on two current datasets, we demonstrate significant performance improvements for eye contact detection across mobile devices, users, or environmental conditions. Moreover, we discuss how our method enables the calculation of additional attention metrics that, for the first time, enable researchers from different domains to study and quantify attention allocation during mobile interactions in the wild.

-

Wearable Eye Tracker Calibration at Your Fingertips

ACM Symposium on Eye Tracking Research & Applications (ETRA 2018)

Mihai Bâce, Sander Staal, Gábor Sörös

Common calibration techniques for head-mounted eye trackers rely on markers or an additional person to assist with the procedure. This is a tedious process and may even hinder some practical applications. We propose a novel calibration technique which simplifies the initial calibration step for mobile scenarios. To collect the calibration samples, users only have to point with a finger to various locations in the scene. Our vision-based algorithm detects the users’ hand and fingertips which indicate the users’ point of interest. This eliminates the need for additional assistance or specialized markers. Our approach achieves comparable accuracy to similar marker-based calibration techniques and is the preferred method by users from our study. The implementation is openly available as a plugin for the open-source Pupil eye tracking platform.

-

Collocated Multi-user Gestural Interactions with Unmodified Wearable Devices

Augmented Human Research (2017)

Mihai Bâce, Sander Staal, Gábor Sörös, Giorgio Corbellini

Many real-life scenarios can benefit from both physical proximity and natural gesture interaction. In this paper, we explore shared collocated interactions on unmodified wearable devices. We introduce an interaction technique which enables a small group of people to interact using natural gestures. The proximity of users and devices is detected through acoustic ranging using inaudible signals, while in-air hand gestures are recognized from three-axis accelerometers. The underlying wireless communication between the devices is handled over Bluetooth for scalability and extensibility. We present (1) an overview of the interaction technique and (2) an extensive evaluation using unmodified, off-the-shelf, mobile, and wearable devices which show the feasibility of the method. Finally, we demonstrate the resulting design space with three examples of multi-user application scenarios.

-

HandshakAR: Wearable Augmented Reality System for Effortless Information Sharing

Augmented Human Research (2017)

Mihai Bâce, Gábor Sörös, Sander Staal, Giorgio Corbellini

When people are introduced to each other, exchanging contact information happens either via smartphone interactions or via more traditional business cards. Crowded social events make it more challenging to keep track of all the new contacts. We introduce HandshakAR, a novel wearable augmented reality application that enables effortless sharing of digital information. When two people share the same greeting gesture (e.g., shaking hands) and are physically close to each other, their contact information is effortlessly exchanged. No instrumentation of the environment is required; our approach works on the users' wearable devices. Physical proximity is detected via inaudible acoustic signals, hand gestures are recognized from motion sensors, the communication between devices is handled over Bluetooth, and contact information is displayed on smartglasses. We describe the concept, the design, and an implementation of our system on unmodified wearable devices.